How to Secure and Harden Splunk Enterprise

This post provides guidance on steps you can take to secure and harden Splunk environments. Many of the security features simply follow security best practices, while others would probably only be implemented if there were a business or regulatory need to do so.

Securing Data Communication

Encrypt communication between Splunk components

- What this is: SSL encryption of data communication between Splunk components (e.g. search heads, indexers, deployment servers and forwarders) over port 8089.

- When to implement: This is implemented by default and the recommendation is to leave it enabled.

- Points to note: In environments with a lot of indexers (e.g. 30 or more), search performance can be impacted.

- For more information, click here
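One way to confirm that a component really is speaking SSL/TLS on its management port is to attempt a handshake and look at what gets negotiated. The following is a minimal sketch using only the Python standard library; it is not a Splunk tool, the function names are made up for illustration, and certificate verification is deliberately switched off so that self-signed certificates do not abort the probe (do not reuse that setting for anything other than inspection).

```python
import socket
import ssl


def make_probe_context() -> ssl.SSLContext:
    """Build a TLS context for inspection only: certificate checks are off
    so that self-signed certificates do not abort the handshake."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before verify_mode is relaxed
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def probe_tls(host: str, port: int = 8089, timeout: float = 5.0) -> tuple:
    """Return (protocol version, cipher name) negotiated with host:port.

    Raises ssl.SSLError if the port does not speak TLS, or another OSError
    if the port cannot be reached at all.
    """
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with make_probe_context().wrap_socket(raw, server_hostname=host) as tls:
            return tls.version(), tls.cipher()[0]
```

Calling `probe_tls("splunk-idx01.example.com")` (a hypothetical host name) against each indexer and search head gives a quick inventory of which protocol versions are actually in use.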

Encrypt communication from browsers to Splunk Web

- What this is: SSL encryption of Splunk Web user sessions. This would commonly be on the search head, but could also be on other components where Splunk Web is running.

- When to implement: Best practice is to implement this on your search heads at a minimum.

- Points to note: You can either use self-signed certificates or implement your own SSL certificate.

- For more information, click here

Encrypt communication from forwarders to indexers

- What this is: SSL encryption of the log data flowing from the Splunk forwarders to the Splunk indexers.

- When to implement: Best practice is to enable this so that potentially sensitive data is encrypted over the network. You may choose to enable it only on your more sensitive endpoints. Additionally, regulatory or business requirements may mandate it.

- Points to note: If you have a mix of SSL-enabled and non-SSL forwarders, they will need to send to separate ports on the indexer (e.g. 9997 for non-SSL and 9998 for SSL). There may be a small performance impact at index time, depending on data volumes and the number of indexers you have.

- For more information, click here
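When planning a mixed rollout, it can help to list which forwarders need to point at which receiving port. The sketch below is a planning aid only: the forwarder names are hypothetical and the two ports are simply the example values from the text above.

```python
from collections import defaultdict

# Example ports taken from the text above; any free ports would do.
PLAIN_PORT = 9997
SSL_PORT = 9998


def group_forwarders_by_port(forwarders: dict) -> dict:
    """Map each receiving port to the forwarders that must target it.

    `forwarders` maps a forwarder name to True if it sends over SSL.
    """
    groups = defaultdict(list)
    for name, uses_ssl in sorted(forwarders.items()):
        groups[SSL_PORT if uses_ssl else PLAIN_PORT].append(name)
    return dict(groups)


plan = group_forwarders_by_port({"web01": True, "db01": False, "web02": True})
# plan == {9997: ["db01"], 9998: ["web01", "web02"]}
```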

Securing Against Data Loss or Modification

Enable Indexer Acknowledgement

- What this is: Used for ensuring delivery of data from the forwarder to the indexer. When enabled, the forwarder will resend any data not acknowledged as “received” by the indexer.

- When to implement: Implement this if you need to guarantee that every event reaches the indexer, protecting against in-flight data loss caused by things like network outages, indexers going down and timeouts.

- Points to note: Expect this to have some performance impact and introduce the possibility of event duplication. Also ensure the forwarder queue size is sufficient to cache data in the event that data cannot get to the indexers.

- For more information, click here
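The trade-off between guaranteed delivery and duplication can be seen in a toy model. The sketch below is not how Splunk implements acknowledgement; it is a simplified simulation in which the forwarder keeps each event queued until it is acknowledged, and the `faults` argument (an assumption made for the demo) scripts where the network misbehaves.

```python
from collections import deque


def deliver_with_acks(events, faults):
    """Toy model of acknowledged delivery.

    `faults` maps an attempt number (0-based, counted across all sends) to
    "drop_data" (event lost in flight) or "drop_ack" (event stored, but the
    acknowledgement is lost). Unacknowledged events stay queued and are resent.
    """
    pending = deque(events)  # forwarder-side queue of unacknowledged events
    indexed = []             # what the indexer has written
    attempt = 0
    while pending:
        event = pending[0]
        fault = faults.get(attempt)
        attempt += 1
        if fault == "drop_data":
            continue              # never arrived: resend on the next pass
        indexed.append(event)     # indexer stores the event
        if fault == "drop_ack":
            continue              # forwarder never hears back: resend
        pending.popleft()         # acknowledged: safe to forget
    return indexed


print(deliver_with_acks(["a", "b", "c"], {0: "drop_data", 2: "drop_ack"}))
# prints ['a', 'b', 'b', 'c']
```

No event is lost, but the lost acknowledgement for `b` causes it to be indexed twice. The `pending` queue also shows why the forwarder needs enough queue space to hold everything that has not yet been acknowledged.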

Enable Event and IT Data Block Signing

- What this is: IT events, audit events and archives can be cryptographically signed to help detect any modification or tampering of the underlying data.

- When to implement: This level of security may be needed to meet regulatory requirements, or if you are storing particularly sensitive data, or simply want reassurance that logs have not been tampered with.

- Points to note: Expect a fairly high indexing performance cost when implementing these features. Signing cannot currently be used in a clustered setup, nor is it supported for distributed search, so its usefulness may be somewhat limited. The less secure Event Hashing feature can be leveraged when signing cannot be used.

- For more information, click here
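The general idea of signing a block of events can be illustrated with a keyed signature. This is a sketch of the concept only, not Splunk's implementation: HMAC-SHA256 stands in for whatever signature scheme the product uses, and the function names and key are hypothetical.

```python
import hashlib
import hmac


def sign_block(events: list, key: bytes) -> str:
    """Return a hex HMAC-SHA256 signature over a block of events."""
    mac = hmac.new(key, digestmod=hashlib.sha256)
    for event in events:
        data = event.encode("utf-8")
        # Length-prefix each event so event boundaries are part of the signature.
        mac.update(len(data).to_bytes(8, "big"))
        mac.update(data)
    return mac.hexdigest()


def verify_block(events: list, key: bytes, signature: str) -> bool:
    """True if the block still matches the signature made at index time."""
    return hmac.compare_digest(sign_block(events, key), signature)


key = b"demo-key-not-for-production"  # hypothetical key, for the demo only
block = ["login ok user=alice", "login failed user=bob"]
signature = sign_block(block, key)

block[1] = "login ok user=bob"  # tamper with one event
print(verify_block(block, key, signature))  # prints False
```

Because the signature depends on a secret key, someone who can edit the stored events but does not hold the key cannot produce a matching signature.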

Enable Event Hashing

- What this is: Event hashing provides a lightweight way to detect if events have been tampered with between index time and search time. Event hashes are not cryptographically secure like IT data block signing.

- When to implement: Use when you do not have the capability to run the IT data block signing feature.

- Points to note: Individual event hashing is more resource intensive than data block signing and someone could tamper with an event if they obtain physical access to the back-end file system.

- For more information, click here
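To see why a plain hash is weaker than a signature, consider the following sketch. It is an illustration of the concept under the assumption of an unkeyed SHA-256 digest per event, not a description of Splunk's internal format.

```python
import hashlib


def hash_event(raw_event: str) -> str:
    """Unkeyed SHA-256 digest of one event, computed at index time."""
    return hashlib.sha256(raw_event.encode("utf-8")).hexdigest()


def is_unmodified(raw_event: str, stored_hash: str) -> bool:
    """Search-time check: recompute the digest and compare."""
    return hash_event(raw_event) == stored_hash


stored = hash_event("payment approved amount=100")

# A change to the event alone is detected...
print(is_unmodified("payment approved amount=900", stored))  # prints False

# ...but someone with file-system access can rewrite the hash as well,
# because computing it requires no secret.
forged = hash_event("payment approved amount=900")
print(is_unmodified("payment approved amount=900", forged))  # prints True
```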

Securing Data Access

Integrate with existing Authentication and IDM controls

- What this is: Integration of Splunk with existing identity management systems in your business (e.g. LDAP, SSO, two-factor/RADIUS), to meet company policies and requirements.

- When to implement: You should consider implementing this where possible. The default local authentication adds management overhead and risk compared with integrating with the well-established identity management systems already in your company.

- Points to note: There are many common out-of-the-box integrations, such as LDAP. For others, some programming may be required.

- For more information, click here

Restrict Access using Roles

- What this is: Implementation of roles within Splunk, to restrict access to only the data that users of those roles need.

- When to implement: Implement when you need to restrict access to data in Splunk – which should probably be most cases. It is best practice to take a least privilege approach to access, so please don’t give everyone admin rights…

- Points to note: Plan out your role-based access carefully. Think about all the individuals that will need access and design a role-based approach that will scale and can be managed effectively.

- For more information, click here
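It can help to model the intended role design before configuring anything. The sketch below is a planning aid, not Splunk configuration: the role names and index names are hypothetical, and it assumes that a user's access is the union of what each assigned role grants.

```python
# Hypothetical role definitions: role name -> indexes that role may search.
ROLE_INDEXES = {
    "netops": {"firewall", "netflow"},
    "appdev": {"app_logs"},
    "secops": {"firewall", "netflow", "app_logs", "audit"},
}


def allowed_indexes(user_roles: list) -> set:
    """Union of the indexes granted by each of the user's roles.
    Unknown roles grant nothing (deny by default)."""
    allowed = set()
    for role in user_roles:
        allowed |= ROLE_INDEXES.get(role, set())
    return allowed


def can_search(user_roles: list, index: str) -> bool:
    """True only if at least one of the user's roles grants the index."""
    return index in allowed_indexes(user_roles)


print(can_search(["appdev"], "audit"))            # prints False
print(can_search(["appdev", "netops"], "netflow"))  # prints True
```

Walking each planned user through a model like this is a cheap way to spot roles that grant more than the person actually needs.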

Remove Inactive Users

- What this is: Identify and remove all users who have not logged into Splunk for xx days, all users who have changed roles and no longer require Splunk access, and all users who have left the company.

- When to implement: It would be considered best practice to implement these types of controls and in most cases, a policy like this should exist within your company.

- Points to note: You can have Splunk alert on user activity and/or integrate Splunk with your Identity Management (IDM) systems within your company.
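The inactivity check itself is simple once last-login times are available. The sketch below assumes you have already exported each user's last login from Splunk or your IDM system into a dictionary; the function name, the sample users and the 90-day threshold are illustrative only, and the actual threshold should come from your company policy.

```python
from datetime import datetime, timedelta


def inactive_users(last_logins: dict, now: datetime, max_idle_days: int) -> list:
    """Users whose last login is older than max_idle_days, or who never logged in.

    `last_logins` maps a user name to a datetime, or to None if the user
    has never logged in.
    """
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        user for user, last in last_logins.items()
        if last is None or last < cutoff
    )


logins = {
    "alice": datetime(2014, 6, 1),
    "bob": datetime(2014, 1, 15),
    "carol": None,  # account created but never used
}
print(inactive_users(logins, now=datetime(2014, 6, 30), max_idle_days=90))
# prints ['bob', 'carol']
```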

Anonymize / Mask Sensitive Data

- What this is: Splunk is able to anonymize or mask sensitive data in events, such as credit card numbers or social security numbers, to prevent this data from being indexed and stored in Splunk.

- When to implement: Useful when you have sensitive data in your log events that you really do not want being indexed into Splunk or made searchable by users.

- Points to note: This is handled through the use of regex or sed scripts when the data hits Splunk. A better approach might be to see if you can configure logging such that sensitive data does not get into the logs in the first place, but this is not always possible.

- For more information, click here
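The kind of regex substitution involved can be prototyped outside Splunk before it is turned into configuration. The following sketch uses Python's `re` module; the patterns are deliberately simplified assumptions (real card and social security number formats vary) and the function name is hypothetical.

```python
import re

# Simplified patterns for illustration; real card and SSN formats vary.
CARD_RE = re.compile(r"\b(?:\d{4}[- ]?){3}(\d{4})\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_sensitive(event: str) -> str:
    """Mask card numbers (keeping the last four digits) and SSNs."""
    event = CARD_RE.sub(lambda m: "XXXX-XXXX-XXXX-" + m.group(1), event)
    return SSN_RE.sub("XXX-XX-XXXX", event)


print(mask_sensitive("card=4111-1111-1111-1111 ssn=123-45-6789"))
# prints card=XXXX-XXXX-XXXX-1111 ssn=XXX-XX-XXXX
```

Testing patterns against a sample of real log lines first makes it easier to catch both missed matches and over-eager ones before any data is indexed.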

Securing Other Areas

Stop Splunk Web when not in use

- What this is: Splunk Web is typically enabled by default on many components such as indexers, search heads, deployment servers and even heavy forwarders. However, most of the time, it only really needs to be enabled on the search head.

- When to implement: Stop Splunk Web on components other than the search head that are not regularly logged into. When you want to use the web front-end of a particular component, simply start it up.

- Points to note: This is more of a best practice than anything else – why essentially run a web server if not needed?

- For more information, click here

Change the default username/password (on the Forwarders too…)

- What this is: The default username/password for all Splunk components is admin/changeme. It goes without saying that a username and password that everyone knows is not terribly secure!

- When to implement: Always. When logging into Splunk for the first time via Splunk Web, the admin user is required to change the password. However, this is not the case on Splunk forwarders. All Splunk components, including the forwarder, can be interacted with using the REST API.

- Points to note: Any endpoints where Splunk is installed with the default username/password could potentially be open to malicious activity. If required, it is possible to script a password change via the deployment server to bulk change all Splunk forwarder passwords.

- For more information, click here

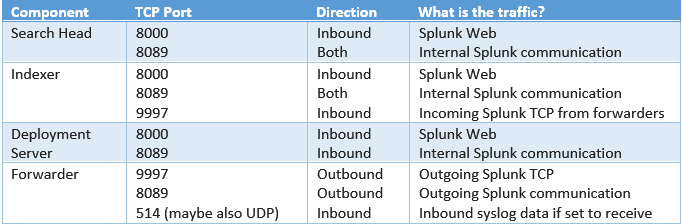

Implement appropriate firewall/port rules

- What this is: Splunk uses a number of ports and directions for its various components.

- When to implement: Always. It is best practice to only open up these ports/directions rather than allowing all directions and ports.

- Points to note: The following table lists the core firewall rules required by default Splunk configurations and the direction required. If you listen/send data on other ports, then you will need to implement those rules as well.
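Whatever rules end up in place, it is worth verifying them from the machines that actually need to connect. The sketch below is a generic TCP reachability check using the Python standard library, not a Splunk utility; the function names and the host name in the usage note are hypothetical, and the ports you pass in should come from your own rule set.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_rules(host: str, expected_open: set, expected_closed: set) -> list:
    """Return human-readable mismatches between expectations and reality."""
    problems = []
    for port in sorted(expected_open):
        if not is_port_open(host, port):
            problems.append(f"{host}:{port} should be reachable but is not")
    for port in sorted(expected_closed):
        if is_port_open(host, port):
            problems.append(f"{host}:{port} should be blocked but is reachable")
    return problems
```

For example, running `check_rules("splunk-idx01.example.com", {8089, 9997}, {22})` from a forwarder would report any port that is open when it should be blocked, or blocked when it should be open. An empty list means the observed behaviour matches expectations from that vantage point.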

Avoid running Splunk as Root

- What this is: It is not good security practice to run software as the root user and Splunk does not need to be run as root.

- When to implement: In most production circumstances, you would likely not want to run Splunk as root.

- Points to note: When running as a non-root user, ensure the correct permissions are assigned to read log files, write to the splunk directory, execute needed scripts and bind to appropriate network ports.

- For more information, click here
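Before switching to a non-root account, a quick pre-flight check can catch the most common permission problems. The sketch below is a generic Unix check, not part of Splunk; the function names are hypothetical and the paths in the usage note are examples only.

```python
import os
from typing import Iterable, Optional


def running_as_root(euid: Optional[int] = None) -> bool:
    """True if the effective user ID is 0 (root). Unix-only.

    `euid` can be passed explicitly for testing; by default the current
    process's effective user ID is used.
    """
    return (os.geteuid() if euid is None else euid) == 0


def unreadable_paths(paths: Iterable[str]) -> list:
    """Paths the current user cannot read; these would need their
    permissions adjusted before a non-root account can monitor them."""
    return [p for p in paths if not os.access(p, os.R_OK)]
```

Run as the intended service account, `unreadable_paths(["/var/log/messages", "/var/log/secure"])` lists the log files that account cannot yet read. Note also that on most Unix systems a non-root process cannot bind to ports below 1024.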

Do not use Splunk Free in a Production Environment

- What this is: Splunk Free is the free version of Splunk that the 30-day Enterprise trial reverts to once the 30 days are up. However, the free version of Splunk is also free of any security.

- When to implement: Always. Splunk Free is not designed to be used in a production environment. The lack of security in the free version could, in theory, allow any machine-readable file on the endpoint where Splunk Free is installed to be read.

- Points to note: Splunk Free is good for spinning up and down to test things, but developers should get a developer license and businesses should purchase an Enterprise license. If you are evaluating Splunk and your 30 days are up, contact Splunk to get an extension.

- Free vs. Enterprise Comparison

© Discovered Intelligence Inc., 2014. Do More with your Big Data™. Unauthorised use and/or duplication of this material without express and written permission from this site’s owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given to Discovered Intelligence, with appropriate and specific direction (i.e. a linked URL) to this original content.